Linux, as we know, is a kernel and not an operating system; it ships in several distributions such as Debian, Fedora, Ubuntu and many more. Ubuntu, developed by Canonical, the company founded by Mark Shuttleworth, is popular and widely used. Being free and open source, it sees a new version released every six months, built with contributions from thousands of developers. But how does it function? Which processes and events make it work, and what is the significance of these processes?

This article takes you a little way into the internals of Ubuntu, which are very interesting, and should help a novice build a complete picture of how the system functions.

Lay Down of the System

Linux relies on processes for everything it does. Each system service, including power management, boot up and system crash handling, is a process with a configuration file in “/etc/init” that describes the event on which it starts and the event on which it stops. Each service also keeps other configuration files, which describe its run-time behaviour, in the system’s “/etc/” directory. This makes the system an event-driven one.

If events are generated, then something must catch them and act on them. That controller is the main process, init, which has process id 1 and is the parent of all other processes. It starts when the system starts and never stops: since no process is the parent of init, it dies only when the system is powered down.

Versions of Ubuntu before 6.10 included the old-style sysvinit, which ran the scripts in the “/etc/rcX.d” directories on every startup and shutdown of the system. From 6.10 onwards the upstart system replaced sysvinit, while still providing backward compatibility with it.

Recent Ubuntu versions use this upstart system, which has gone through several revisions since Ubuntu 6.10; the current version is 1.13.2 as of 4th September 2014. The latest upstart system has two init processes: one for the system processes, and another that manages the session of the currently logged-in user and exists only while that user is logged in. The latter is also called the x-session init.

The whole system is laid out as a hierarchy, with ancestor-child relationships holding from power up to power down.

For example, a small hierarchical relation between the two init processes is: system init(1) -> display manager(kernel space) -> display manager(user space) -> user init(or x-session init).
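To make the idea of an ancestor chain concrete, here is a minimal Python sketch that walks from a process up to the system init. The process table, its PIDs and the process names are made up for illustration; on a real system this information would come from the kernel.

```python
# Hypothetical process table: PID -> (name, parent PID). All values are made up.
PROCESSES = {
    1: ("init", 0),
    1100: ("display manager", 1),
    1320: ("display manager (user session)", 1100),
    1450: ("init --user", 1320),
}


def ancestry(pid, processes):
    """Return process names from the system init down to `pid`."""
    chain = []
    while pid in processes:
        name, parent = processes[pid]
        chain.append(name)
        pid = parent  # climb one level towards PID 1
    return list(reversed(chain))


print(" -> ".join(ancestry(1450, PROCESSES)))
```

Whichever process you start from, the walk ends at PID 1, which is what makes init the root of the hierarchy.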

The configuration files for processes managed by the system init reside in “/etc/init”, and those for processes managed by the session init reside in “/usr/share/upstart” (as of upstart versions above 1.12). These configuration files are the key to many of the secrets about processes uncovered in this article.

Getting Deeper into the Hierarchy

Ubuntu recognizes two types of processes:

- Short lived jobs (or work-and-die jobs).

- Long lived jobs (or stay-and-work jobs).

The hierarchy on the system arises from the dependency relationships between processes, which we can understand by reading their configuration files. Let’s start with a simple hierarchical relation between the processes that boot the system, and understand the significance of each of them.

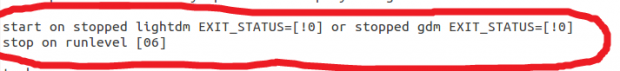

Boot Hierarchy

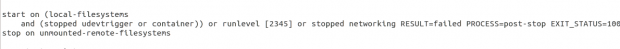

Init is the first process to start when the system is powered on, and it is classified as a stay-and-work job because it is never killed during normal operation; it dies only once per session, at power down. On powering up, init generates the very first event on the system, the startup event. Each configuration file in “/etc/init” has two lines that define the events that cause the process to start and to stop. Those lines are highlighted in the accompanying figure.

This is the configuration file of the process failsafe-x, and its start on and stop on conditions describe the events on which the process will start and stop. When init generates the startup event, the processes that have startup as their start on condition are executed in parallel. This alone defines the hierarchy: all the processes that execute on startup are children of init.

The processes that start on startup are listed below; they are all work-and-die jobs:
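As a minimal sketch of how these two lines can be read, the Python snippet below pulls the start on and stop on conditions out of job configuration text. The sample text is a hypothetical job written for this example, not a copy of the real failsafe-x file, and the parser is a simplification that only handles conditions written on a single line.

```python
def read_conditions(conf_text):
    """Return the 'start on' and 'stop on' conditions found in job config text.

    Simplification: only single-line conditions are handled.
    """
    conditions = {"start on": None, "stop on": None}
    for raw_line in conf_text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and padding
        for keyword in conditions:
            if line.startswith(keyword + " "):
                conditions[keyword] = line[len(keyword):].strip()
    return conditions


# Hypothetical job file, for illustration only.
SAMPLE_CONF = """
description "example job"
start on filesystem and static-network-up
stop on runlevel [06]
exec /usr/bin/example-daemon
"""

print(read_conditions(SAMPLE_CONF))
```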

1. hostname – This process simply sets the system’s hostname, as defined in the /etc/hostname file.

2. kmod – Loads the kernel modules, i.e. the drivers, listed in the /etc/modules file.

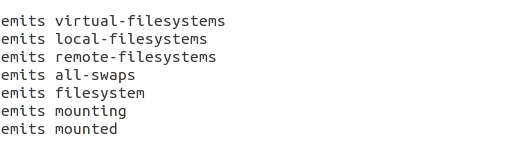

3. mountall – This process generates a lot of events and is mainly responsible for mounting all the filesystems on boot, including local and remote filesystems.

The /proc filesystem is also mounted by this very process, and after all the mounting work the last event it generates is the filesystem event, which lets the hierarchy proceed further.

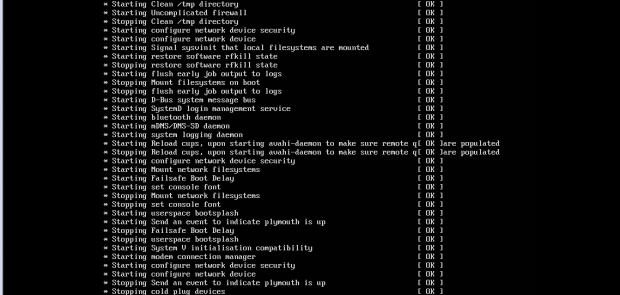

4. plymouth – This process executes when mountall is starting and is responsible for the black splash screen that is seen during system startup.

5. plymouth-ready – Indicates that plymouth is up.

The above are the main processes; others, such as udev-fallback-graphics, also execute on startup. Coming back to the boot hierarchy, in a nutshell the events and processes follow in this sequence:

1. init starts and generates the startup event.

2. mountall mounts the filesystems, plymouth (started along with mountall) displays the splash screen, and kmod loads the kernel modules.

3. The local-filesystems event generated by mountall causes dbus to run. (D-Bus is the system-wide message bus: it creates a socket through which other processes communicate by sending messages, and each receiver listens on this socket and filters out the messages meant for it.)

4. The local-filesystems event, together with the started dbus event and the static-network-up event raised by the network process (which also runs on the local-filesystems event), causes network-manager to run.

5. The virtual-filesystems event generated by mountall causes udev to run. (udev is the device manager for Linux; it manages hot-plugging of devices and is responsible for creating and managing the files in the /dev directory.) udev creates the files for RAM, ROM, etc. in the /dev directory once mountall has finished mounting the virtual filesystems and has generated the virtual-filesystems event, which signifies that /dev is mounted.

6. udev causes upstart-udev-bridge to run, which signifies that the local network is up. Meanwhile, mountall finishes mounting the last filesystem and generates the filesystem event.

7. The filesystem event, along with the static-network-up event, causes the rc-sysinit job to run. Here comes the backward compatibility between the older sysvinit and upstart.

8. rc-sysinit runs the telinit command, which sets the system runlevel.

9. Once the runlevel is known, init executes the scripts whose names start with ‘S’ or ‘K’ in the directory /etc/rcX.d (where ‘X’ is the current runlevel), starting the jobs whose names begin with ‘S’ and killing those whose names begin with ‘K’.
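The ‘S’ and ‘K’ naming rule in the last step can be sketched in a few lines of Python. The script names below are hypothetical, and the ordering used here (all ‘K’ scripts first, then all ‘S’ scripts, each group sorted by name) is the conventional sysvinit behaviour rather than something stated in this article.

```python
def plan_runlevel(script_names):
    """Turn rcX.d entries into (action, name) steps: K scripts, then S scripts."""
    kills = sorted(n for n in script_names if n.startswith("K"))
    starts = sorted(n for n in script_names if n.startswith("S"))
    return [("stop", n) for n in kills] + [("start", n) for n in starts]


# Hypothetical directory listing; entries without an S or K prefix are ignored.
SAMPLE = ["S20example-daemon", "K10old-service", "S05example-early", "README"]

for action, name in plan_runlevel(SAMPLE):
    print(action, name)
```

The two digits after the letter only influence the sort order, which is how the directory listing doubles as a schedule.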

This small set of events causes the system to start each time you power it on. This event-driven triggering of processes is the only thing responsible for creating the hierarchy.

Another aspect of the above is the source of each event. Which process generates which event is also specified in the configuration file of that process.

The configuration file of the process mountall, for instance, contains a section listing the events it emits. The name of each event is the word that follows ‘emits’. An event can either be one declared in a configuration file in this way, or be the name of a process prefixed with ‘starting’, ‘started’, ‘stopping’ or ‘stopped’.
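The boot sequence above can be imitated with a toy event loop in Python. This is only a sketch under simplifying assumptions: the job table is a reduced, hand-written version of the sequence described in this article, each job waits for all of its listed events, and rc-sysinit's dependency on static-network-up is left out to keep the table small.

```python
from collections import deque

# Reduced job table (an illustration, not the real files in /etc/init).
# A job starts once ALL events in "needs" have been seen, then emits its events.
JOBS = {
    "mountall": {"needs": {"startup"},
                 "emits": ["virtual-filesystems", "local-filesystems", "filesystem"]},
    "kmod": {"needs": {"startup"}, "emits": []},
    "udev": {"needs": {"virtual-filesystems"}, "emits": []},
    "dbus": {"needs": {"local-filesystems"}, "emits": []},
    "rc-sysinit": {"needs": {"filesystem"}, "emits": ["runlevel"]},
}


def boot_order(jobs, first_event="startup"):
    """Return job names in the order their start conditions become satisfied."""
    seen = set()
    started = []
    queue = deque([first_event])
    while queue:
        seen.add(queue.popleft())
        for name, job in jobs.items():
            if name not in started and job["needs"] <= seen:
                started.append(name)
                queue.extend(job["emits"])  # newly emitted events wake other jobs
    return started


print(boot_order(JOBS))
```

Nothing in the table states an order directly; the order falls out of which events each job waits for.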

So, here we define two terms:

- Event Generator: One that has the line ‘emits xxx’ in its configuration file, where xxx is the name of an event it owns or generates.

- Event Catcher: One that has xxx in its start on or stop on condition, i.e. one that starts or stops on an event generated by one of the Event Generators.

Thus the hierarchy, and with it the dependency between processes, follows:

Event generator (parent) -> Event catcher (child)
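A short Python sketch shows how generator-to-catcher pairs could be derived from the ‘emits’ lines. The excerpt below is a hand-typed illustration rather than a verbatim copy of the mountall file, and the catcher table simply restates the udev and dbus conditions described earlier.

```python
def emitted_events(conf_text):
    """Collect the event names that follow the word 'emits' in job config text."""
    return [line.split()[1]
            for line in conf_text.splitlines()
            if line.strip().startswith("emits ")]


def parent_child_edges(generators, catchers):
    """Pair each event generator with every job that waits on one of its events."""
    edges = []
    for parent, events in generators.items():
        for child, wanted in catchers.items():
            if set(events) & set(wanted):
                edges.append((parent, child))
    return edges


# Illustrative excerpt, typed by hand for this example.
MOUNTALL_EXCERPT = """
emits virtual-filesystems
emits local-filesystems
emits filesystem
"""

generators = {"mountall": emitted_events(MOUNTALL_EXCERPT)}
catchers = {"udev": ["virtual-filesystems"], "dbus": ["local-filesystems"]}
print(parent_child_edges(generators, catchers))
```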

Adding Complexity to the Hierarchy

By now you should understand how the hierarchy of parent-child dependencies between processes is laid down by the event-triggering mechanism, as illustrated by the simple boot-up sequence.

This hierarchy is never a strict one-to-one relationship with exactly one parent for each child. A child may have one or more parents, and one process may be the parent of more than one child. How is this accomplished? The answer lies in the configuration files themselves.

Consider the start on condition of the process networking: it looks rather complex, being composed of several events, namely local-filesystems, udevtrigger, container, runlevel and networking.

local-filesystems is emitted by mountall, udevtrigger is the name of a job, the container event is emitted by container-detect, the runlevel event is emitted by rc-sysinit, and networking is again a job.

Thus, in the hierarchy, the process networking is a child of mountall, udevtrigger and container-detect, as it cannot begin its work (the work of a process being the lines defined under the script or exec sections of its configuration file) until those processes generate their events.

Likewise, one process can be the parent of many if the event it generates is caught by many.
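How a job can end up with several parents is easier to see when a compound condition is evaluated step by step. The Python sketch below represents a condition as nested tuples and checks it against the set of events seen so far. The condition shown is a hypothetical combination of the event names mentioned above, not the exact text of the networking job, and real upstart event matching is more involved than this set lookup.

```python
def satisfied(condition, seen):
    """Evaluate a nested ('and' | 'or', operand, ...) condition against seen events."""
    if isinstance(condition, str):
        return condition in seen  # a plain event name
    operator, *operands = condition
    results = [satisfied(operand, seen) for operand in operands]
    return all(results) if operator == "and" else any(results)


# Hypothetical condition: local-filesystems and (stopped udevtrigger or container)
CONDITION = ("and", "local-filesystems", ("or", "stopped udevtrigger", "container"))

print(satisfied(CONDITION, {"local-filesystems"}))
print(satisfied(CONDITION, {"local-filesystems", "container"}))
```

With only local-filesystems seen, the job still waits; it needs a second event from one of its other parents before it can start.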

Distinguishing Job Types

As defined previously, jobs are either short lived (work-and-die) or long lived (stay-and-work), but how do we distinguish between them?

Jobs that have both ‘start on’ and ‘stop on’ conditions specified in their configuration files and contain the word ‘task’ are work-and-die jobs. They start on the generated event, execute their script or exec section (blocking the events that caused them while they execute) and then die, releasing the events they blocked.

Jobs that do not have a ‘stop on’ condition in their configuration file are long lived, or stay-and-work, jobs and they never die. Stay-and-work jobs can be classified further as:

- Those that do not have a respawn condition and can be killed by the root user.

- Those that have a respawn condition in their configuration file, and so restart after being killed unless their work has been completed.
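The classification above can be approximated with a small Python function that looks for the ‘task’ and ‘respawn’ keywords. This is a rough sketch: it checks only those two keywords on lines of their own and ignores the start on and stop on conditions, and the sample job texts are hypothetical.

```python
def classify(conf_text):
    """Label a job as work-and-die or stay-and-work from its config keywords."""
    stanzas = [line.strip() for line in conf_text.splitlines()]
    if "task" in stanzas:
        return "work-and-die"
    if "respawn" in stanzas:
        return "stay-and-work, respawned if killed"
    return "stay-and-work"


# Hypothetical job texts, for illustration only.
TASK_JOB = "start on startup\ntask\nexec /bin/example-setup"
DAEMON_JOB = "start on local-filesystems\nrespawn\nexec /usr/bin/example-daemon"
PLAIN_JOB = "start on filesystem\nexec /usr/bin/example-service"

for text in (TASK_JOB, DAEMON_JOB, PLAIN_JOB):
    print(classify(text))
```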

Conclusion

Thus, each process in Linux depends on some processes and has other processes that depend on it. This relationship is many-to-many, and it is specified within the upstart system along with the other details of each process.

Good coverage.

Should that not be “Low Down” rather than “Lay Down” ?

I feel that the “phantom boot” nature of ureadahead deserves a mention, it is a startup strategy rather than a persistent feature, so it may be out of discussion scope.

init deserves a nice tree graphic to illustrate.

No, it’s “lay down”, as it shows how the system is laid down, i.e. comprising the init process and all…

And yes, you are right: ureadahead should be there, as it reads the files required for boot into the page cache in advance of their use. It starts when mountall is just about to start and stops after all the old-style sysvinit jobs have run.

Well done gunjan!! Really very good article on Ubuntu

Nicely written article!

Thank you, this is an excellent article that explains the inner workings of Ubuntu.

Really helpful, thanks